AWS to Azure Connectivity

Several years ago I ran through an exercise of connecting AWS to Azure. At the time, I used an Amazon Linux EC2 instance running Openswan on the AWS side, connected to a virtual network gateway on the Azure side. This time I wanted to use only the managed VPN services on both sides and get a rough idea of the throughput I could expect between the two.

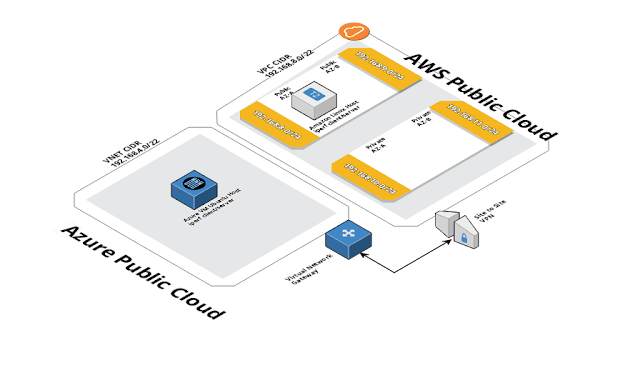

At a high level, the diagram looks like this:

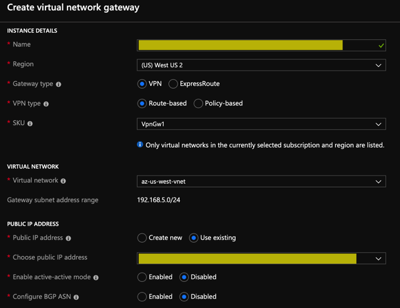

Azure Setup

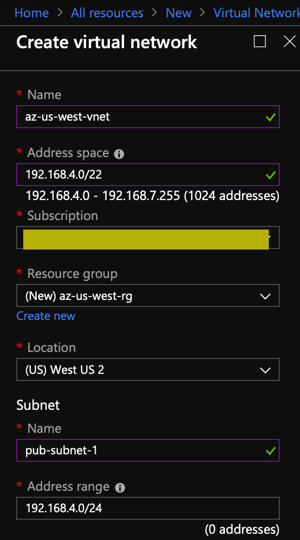

I don’t live in Azure-land very often, so there was a bit of fumbling through some of the steps on this side. The goal is a virtual network with an address space of 192.168.4.0/22, which does not overlap my AWS VPC CIDR (192.168.8.0/22).
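A quick way to double-check that two address spaces don't collide is Python's standard `ipaddress` module. This is a minimal sketch using only the two CIDRs from this post; `cidrs_overlap` is a helper name made up for illustration.

```python
import ipaddress


def cidrs_overlap(a: str, b: str) -> bool:
    """Return True if the two CIDR blocks share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


if __name__ == "__main__":
    azure_vnet = ipaddress.ip_network("192.168.4.0/22")  # Azure VNet address space
    aws_vpc = ipaddress.ip_network("192.168.8.0/22")     # AWS VPC CIDR
    # A /22 spans four /24s: 192.168.4.0-7.255 and 192.168.8.0-11.255 here.
    for net in (azure_vnet, aws_vpc):
        print(net, net.network_address, "-", net.broadcast_address)
    print("overlap:", cidrs_overlap(str(azure_vnet), str(aws_vpc)))
```

The two /22 blocks sit right next to each other (192.168.4.0 to 192.168.7.255, then 192.168.8.0 to 192.168.11.255), so the check reports no overlap.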

Here are the basic steps:

- Create the virtual network on the Azure side

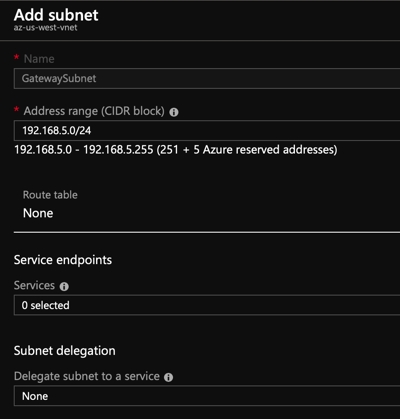

- Add the gateway subnet (Azure requires this subnet to be named GatewaySubnet)

- Add the rest of the subnets to roughly mirror my AWS network

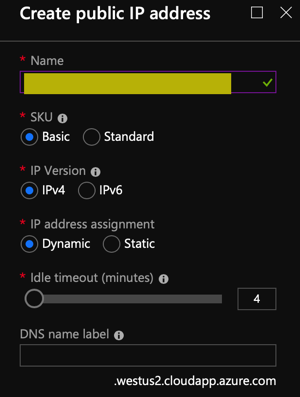

- Create a new public IPv4 address

- Create a new virtual network gateway using the public IPv4 address above
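The actual Azure subnets only appear in the screenshot, so the plan below is hypothetical: the subnet names and sizes are made up for illustration. One thing worth noticing is that mirroring the AWS layout exactly (four /24s) would consume the whole /22 and leave no room for the gateway subnet. The sketch checks that a plan keeps every subnet inside the VNet, avoids overlaps, and includes a `GatewaySubnet` of at least /27, which is the size Microsoft's guidance recommends (treat that threshold as an assumption to confirm against current docs).

```python
import ipaddress
from itertools import combinations

VNET = ipaddress.ip_network("192.168.4.0/22")

# HYPOTHETICAL subnet plan: names and sizes are illustrative, not the post's.
PLAN = {
    "GatewaySubnet": "192.168.4.0/27",
    "public-a": "192.168.5.0/24",
    "public-b": "192.168.6.0/24",
    "private-a": "192.168.7.0/25",
    "private-b": "192.168.7.128/25",
}


def check_plan(vnet, plan):
    """Return a list of human-readable problems with a subnet plan (empty if none)."""
    problems = []
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    # Azure only uses a subnet for the VPN gateway if it is named exactly "GatewaySubnet".
    if "GatewaySubnet" not in nets:
        problems.append("missing subnet named 'GatewaySubnet'")
    elif nets["GatewaySubnet"].prefixlen > 27:
        problems.append("GatewaySubnet is smaller than the recommended /27")
    # Every subnet must fall inside the VNet address space.
    for name, net in nets.items():
        if not net.subnet_of(vnet):
            problems.append(f"{name} {net} is outside {vnet}")
    # No two subnets may share addresses.
    for (n1, a), (n2, b) in combinations(nets.items(), 2):
        if a.overlaps(b):
            problems.append(f"{n1} {a} overlaps {n2} {b}")
    return problems


if __name__ == "__main__":
    print(check_plan(VNET, PLAN) or "plan looks consistent")
```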

AWS Configuration

Now we’ll jump over to AWS for the configuration on that side. I won’t go through the initial steps, but we have a simple VPC spread across two availability zones, with a public and a private subnet in each.

The VPC CIDR and subnet configuration looks like this:

- VPC CIDR: 192.168.8.0/22
- Public AZ-A: 192.168.8.0/24
- Public AZ-B: 192.168.9.0/24
- Private AZ-A: 192.168.10.0/24
- Private AZ-B: 192.168.11.0/24
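The four /24 subnets above exactly tile the /22 VPC CIDR with nothing left over. A small sketch with Python's `ipaddress` module can confirm that; `tiles_exactly` is a hypothetical helper name.

```python
import ipaddress

VPC = ipaddress.ip_network("192.168.8.0/22")

SUBNETS = {
    "Public AZ-A": "192.168.8.0/24",
    "Public AZ-B": "192.168.9.0/24",
    "Private AZ-A": "192.168.10.0/24",
    "Private AZ-B": "192.168.11.0/24",
}


def tiles_exactly(vpc, subnets) -> bool:
    """True if the subnets are disjoint and together cover every address in the VPC."""
    nets = sorted(ipaddress.ip_network(c) for c in subnets.values())
    # collapse_addresses merges adjacent/overlapping blocks; a perfect tiling
    # collapses back to the VPC CIDR itself.
    merged = list(ipaddress.collapse_addresses(nets))
    # Summing sizes catches overlaps, which would count some addresses twice.
    total = sum(n.num_addresses for n in nets)
    return merged == [vpc] and total == vpc.num_addresses


if __name__ == "__main__":
    # Splitting the /22 into /24s yields exactly the four subnets listed above.
    print([str(n) for n in VPC.subnets(new_prefix=24)])
    print("exact tiling:", tiles_exactly(VPC, SUBNETS))
```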

Create a new virtual private gateway and attach it to the VPC:

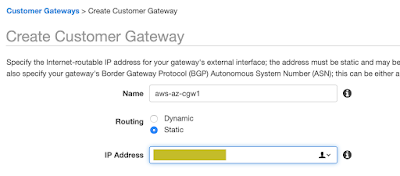

Create a new customer gateway using the public IPv4 address of the Azure virtual network gateway.

Create the VPN connection on the AWS side using static routing, and add the static IP prefix for the Azure VNet (192.168.4.0/22).

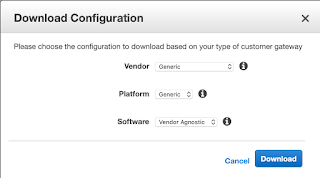
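For anyone who would rather script the AWS steps so far, here is a rough boto3 sketch of the same sequence: create and attach the virtual private gateway, create the customer gateway, then create a static-routing VPN connection with a route for the Azure VNet. It is a scripted alternative to the console steps, not taken from the original walkthrough; the function name, the placeholder BGP ASN of 65000, and the argument values are assumptions, and real code would want error handling and waits for each resource to become available.

```python
"""Sketch: the AWS-side VPN steps via boto3. Assumes AWS credentials are configured."""


def create_static_vpn(ec2, vpc_id: str, azure_public_ip: str, azure_cidr: str) -> str:
    """Create a VGW, customer gateway, and static-route VPN connection.

    `ec2` is a boto3 EC2 client, e.g. boto3.client("ec2", region_name="us-west-2").
    Returns the new VPN connection ID.
    """
    # Virtual private gateway, attached to the existing VPC.
    # (Real code may need to wait for the gateway to be available before attaching.)
    vgw_id = ec2.create_vpn_gateway(Type="ipsec.1")["VpnGateway"]["VpnGatewayId"]
    ec2.attach_vpn_gateway(VpcId=vpc_id, VpnGatewayId=vgw_id)

    # Customer gateway = the Azure virtual network gateway's public IPv4 address.
    # 65000 is a placeholder private ASN; it is not used for static routing.
    cgw_id = ec2.create_customer_gateway(
        Type="ipsec.1", PublicIp=azure_public_ip, BgpAsn=65000
    )["CustomerGateway"]["CustomerGatewayId"]

    # VPN connection with static routing, then the static prefix for the Azure VNet.
    vpn_id = ec2.create_vpn_connection(
        Type="ipsec.1",
        CustomerGatewayId=cgw_id,
        VpnGatewayId=vgw_id,
        Options={"StaticRoutesOnly": True},
    )["VpnConnection"]["VpnConnectionId"]
    ec2.create_vpn_connection_route(
        VpnConnectionId=vpn_id, DestinationCidrBlock=azure_cidr
    )
    return vpn_id
```

A call would look something like `create_static_vpn(boto3.client("ec2", region_name="us-west-2"), "<vpc-id>", "<azure-gateway-ip>", "192.168.4.0/22")`, with the placeholders filled in from your own environment.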

Next, download the configuration file using the “Generic” vendor option.

This file contains the pre-shared key and the virtual private gateway’s outside IP address for each tunnel.

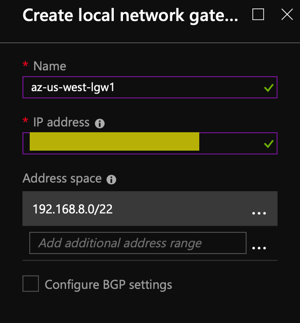

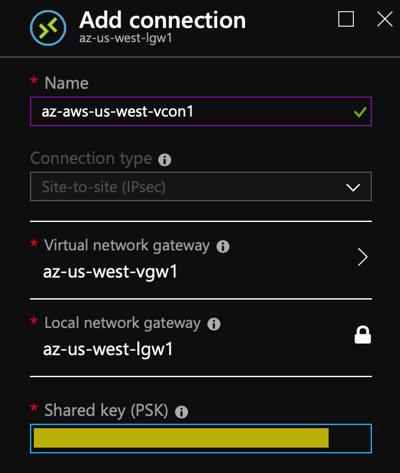

Now, back on the Azure side:

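- Create a local network gateway on the Azure side using the virtual private gateway’s public IPv4 address and the AWS VPC CIDR (192.168.8.0/22) as its address space.

- Add a connection to the virtual network gateway that uses this local network gateway and the pre-shared key from the configuration file.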
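The Azure-side objects map one-to-one onto values from the AWS configuration file: each tunnel's virtual private gateway IP becomes a local network gateway (with the AWS VPC CIDR as its address space), and that tunnel's pre-shared key goes on the matching connection. The sketch below only models that mapping as plain Python data, which also makes the second tunnel an extra loop iteration; the class and resource names (`lng-aws-tunnel1` and so on) are hypothetical, and it does not call any Azure API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class LocalNetworkGateway:
    name: str
    gateway_ip: str          # AWS virtual private gateway outside IP for one tunnel
    address_prefixes: tuple  # AWS-side CIDRs reachable through this gateway


@dataclass(frozen=True)
class Connection:
    name: str
    local_network_gateway: LocalNetworkGateway
    shared_key: str          # pre-shared key for the same tunnel


def azure_side_settings(tunnels, aws_vpc_cidr: str) -> list:
    """Build one local network gateway + connection per (vgw_ip, psk) tunnel pair."""
    vpc = str(ipaddress.ip_network(aws_vpc_cidr))  # validates and normalises the CIDR
    connections = []
    for i, (vgw_ip, psk) in enumerate(tunnels, start=1):
        ipaddress.IPv4Address(vgw_ip)  # raises ValueError on a malformed address
        lng = LocalNetworkGateway(f"lng-aws-tunnel{i}", vgw_ip, (vpc,))
        connections.append(Connection(f"conn-aws-tunnel{i}", lng, psk))
    return connections
```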

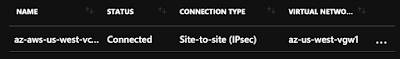

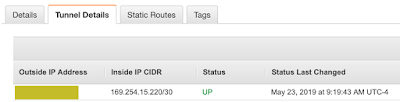

At this point, both sides should show the connection as up.

Rinse and repeat (another local network gateway and connection) if you want to set up the second tunnel.

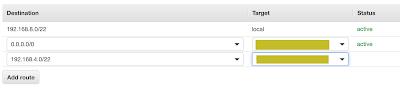

Now add a route for the Azure CIDR (192.168.4.0/22) to the AWS route table, with the virtual private gateway as the target.
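VPC route tables pick the most specific matching route (longest prefix match). The sketch below models that with a hypothetical route table for the public subnet (the `igw-EXAMPLE` and `vgw-EXAMPLE` targets are placeholders) to show why this route matters: without it, traffic to 192.168.4.4 would fall through to the default route instead of the VPN.

```python
import ipaddress
from typing import Optional

# HYPOTHETICAL route table for the public subnet; target IDs are placeholders.
ROUTES = [
    ("192.168.8.0/22", "local"),        # VPC-local traffic
    ("0.0.0.0/0", "igw-EXAMPLE"),       # default route to the internet gateway
    ("192.168.4.0/22", "vgw-EXAMPLE"),  # the route added for the Azure VNet
]


def lookup(dest: str, routes) -> Optional[str]:
    """Return the target of the most specific (longest-prefix) route that contains dest."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The longest prefix (largest prefixlen) wins.
    return max(matches, key=lambda m: m[0].prefixlen)[1]
```

With the full table, `lookup("192.168.4.4", ROUTES)` resolves to the VGW; drop the last entry and the same address matches only `0.0.0.0/0`.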

We should be able to ping from both sides now.

AWS to Azure

```
[ec2-user@ip-192-168-9-54 ~]$ ping 192.168.4.4
PING 192.168.4.4 (192.168.4.4) 56(84) bytes of data.
64 bytes from 192.168.4.4: icmp_seq=1 ttl=64 time=13.3 ms
64 bytes from 192.168.4.4: icmp_seq=2 ttl=64 time=14.6 ms
64 bytes from 192.168.4.4: icmp_seq=3 ttl=64 time=12.7 ms
64 bytes from 192.168.4.4: icmp_seq=4 ttl=64 time=12.9 ms
64 bytes from 192.168.4.4: icmp_seq=5 ttl=64 time=13.0 ms
64 bytes from 192.168.4.4: icmp_seq=6 ttl=64 time=13.0 ms
```

Azure to AWS

```
azvm-user@lnxhost2:~$ ping 192.168.9.54
PING 192.168.9.54 (192.168.9.54) 56(84) bytes of data.
64 bytes from 192.168.9.54: icmp_seq=39 ttl=254 time=13.1 ms
64 bytes from 192.168.9.54: icmp_seq=40 ttl=254 time=14.3 ms
64 bytes from 192.168.9.54: icmp_seq=41 ttl=254 time=12.8 ms
64 bytes from 192.168.9.54: icmp_seq=42 ttl=254 time=13.3 ms
64 bytes from 192.168.9.54: icmp_seq=43 ttl=254 time=13.0 ms
64 bytes from 192.168.9.54: icmp_seq=44 ttl=254 time=12.9 ms
```
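To put a number on the latency in the two ping runs above, a small parser can pull the `time=` values out of Linux ping output and summarise them. This is a minimal sketch; `rtt_summary` is a hypothetical name, and the regex assumes the `time=<n> ms` format shown above.

```python
import re
import statistics

# Matches the round-trip time on a Linux ping reply line, e.g. "... time=13.3 ms".
RTT_RE = re.compile(r"time=([\d.]+) ms")


def rtt_summary(ping_output: str):
    """Return (min, mean, max) round-trip time in milliseconds from ping output."""
    times = [float(t) for t in RTT_RE.findall(ping_output)]
    if not times:
        raise ValueError("no ping replies found")
    return min(times), statistics.mean(times), max(times)


AWS_TO_AZURE = """\
64 bytes from 192.168.4.4: icmp_seq=1 ttl=64 time=13.3 ms
64 bytes from 192.168.4.4: icmp_seq=2 ttl=64 time=14.6 ms
64 bytes from 192.168.4.4: icmp_seq=3 ttl=64 time=12.7 ms
64 bytes from 192.168.4.4: icmp_seq=4 ttl=64 time=12.9 ms
64 bytes from 192.168.4.4: icmp_seq=5 ttl=64 time=13.0 ms
64 bytes from 192.168.4.4: icmp_seq=6 ttl=64 time=13.0 ms
"""

AZURE_TO_AWS = """\
64 bytes from 192.168.9.54: icmp_seq=39 ttl=254 time=13.1 ms
64 bytes from 192.168.9.54: icmp_seq=40 ttl=254 time=14.3 ms
64 bytes from 192.168.9.54: icmp_seq=41 ttl=254 time=12.8 ms
64 bytes from 192.168.9.54: icmp_seq=42 ttl=254 time=13.3 ms
64 bytes from 192.168.9.54: icmp_seq=43 ttl=254 time=13.0 ms
64 bytes from 192.168.9.54: icmp_seq=44 ttl=254 time=12.9 ms
"""

if __name__ == "__main__":
    for label, out in (("AWS -> Azure", AWS_TO_AZURE), ("Azure -> AWS", AZURE_TO_AWS)):
        lo, avg, hi = rtt_summary(out)
        print(f"{label}: min {lo:.1f} / mean {avg:.2f} / max {hi:.1f} ms")
```

For these samples the mean works out to 13.25 ms from AWS to Azure and about 13.23 ms in the other direction, with minimums of 12.7 ms and 12.8 ms and maximums of 14.6 ms and 14.3 ms.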

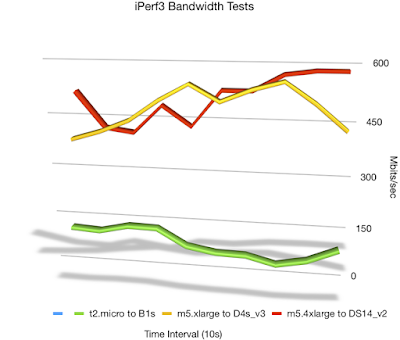

That shows around 13 ms of latency between the two, with both environments in their respective US West 2 regions. That’s not too bad, so I decided to run a few iperf3 tests between nodes to see what sort of bandwidth I could get.
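Before reading too much into single-stream iperf3 numbers, it helps to remember how latency caps TCP: a stream can move at most about one window per round trip, so sustaining a rate R over an RTT T needs roughly R × T bytes in flight (the bandwidth-delay product). The sketch plugs in the ~13 ms measured here; the 1 Gbit/s target and 256 KiB per-stream window are illustrative assumptions, not measurements.

```python
import math


def bdp_bytes(throughput_bps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to sustain the throughput."""
    return throughput_bps * (rtt_ms / 1000.0) / 8.0


def streams_needed(throughput_bps: float, rtt_ms: float, window_bytes: int) -> int:
    """Parallel TCP streams needed if each stream can keep at most window_bytes in flight."""
    return math.ceil(bdp_bytes(throughput_bps, rtt_ms) / window_bytes)


if __name__ == "__main__":
    rtt = 13.0    # approximate round-trip time from the ping tests, in ms
    target = 1e9  # ILLUSTRATIVE 1 Gbit/s target, not a measured result
    print(f"BDP: {bdp_bytes(target, rtt) / 1e6:.3f} MB")
    print("streams at 256 KiB each:", streams_needed(target, rtt, 256 * 1024))
```

At 13 ms, a 1 Gbit/s target needs about 1.6 MB in flight; if each stream were held to a 256 KiB window, that would take 7 parallel streams. iperf3's `-P` (parallel streams) and `-w` (window size) options are the knobs to experiment with, though actual TCP window behaviour depends on OS tuning.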

Not too bad! This probably isn’t a setup you would use in a production environment, but for POC testing it’s definitely doable. Hope this is helpful.

Cloud on….