I was recently given a problem set that involved the long term storage of Microsoft SQL backup files on AWS that should be stored using a “Write Once Read Many” (WORM) type of scenario.

Backup Requirements:

The backup storage requirements were given as these:

- Backups should be retained and immutable for a total of 5 years.

- Backups are taken once per day every day and are approximately 1 GB in size.

- Backups older than 5 years are automatically removed from the archives.

I often describe AWS as the greatest LEGO™ set ever! There are an endless set of possibilities to create what you need to solve the problem at hand. There were 4 options off the top of my head that could possibly work (although there certainly could be others).

- EC2 instance with attached EBS volume for backup storage

- Amazon FSx for Windows

- Standard S3 Storage

- Tiered S3 Storage

I almost ruled the first two options out immediately due to the immutable requirement. I haven’t really tried but there may be some way to make the backup files immutable (at least to the user) on a Windows server. As a baseline I decided to look at those options.

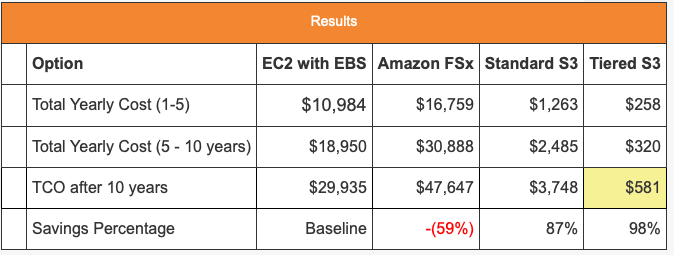

Cost Comparisons:

I did some rough calculations between the four options across a 10 year life span.

Don’t skewer me here. The EC2 cost estimations were done on a t3a.medium instance using on-demand pricing and running continuously. I factored in 30GB EBS and FSx increases each month so that we don’t provision all the storage up front. That works until you hit year 5 and then you are in a steady state. You would use AWS Savings Plans and other measures to continue to reduce costs. What I did want to see was the scale of difference in cost. Clearly S3 is the right LEGO™ piece for this puzzle.

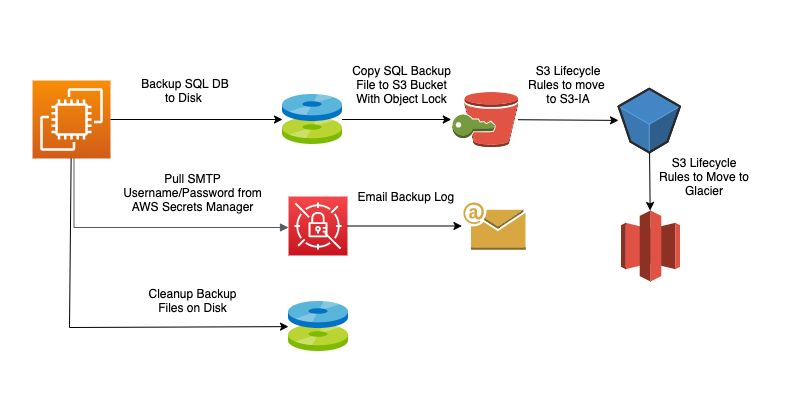

All I needed to was implement something that looked like this.

S3 Lifecycle Rules:

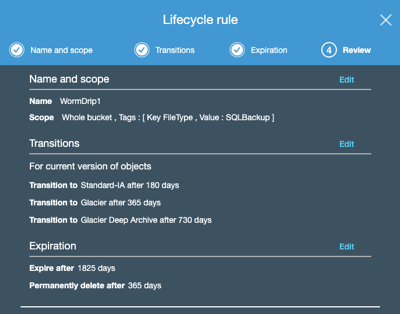

Here is the lifecycle policy that I proposed:

- SQL Backups are object locked for 5 years

- SQL Backups files are stored in Standard S3 for 6 months

- From Standard S3 to S3-A (Infrequent Access) after 6 months

- From S3-IA to S3 Glacier after 1 year

- From S3 Glacier to Glacier Deep Vault after 2 years

- Objects are expired after 5 years.

The S3 Bucket was created with these Lifecycle Rules:

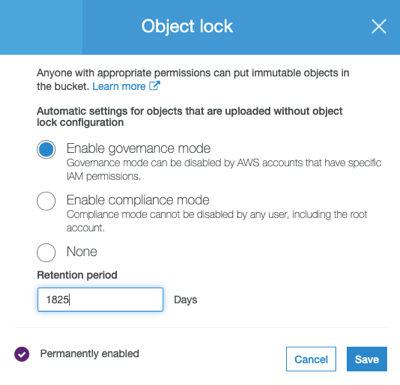

The Object Lock was configured as:

Powershell Script

Now that we have the landing zone for the SQL Backups, we need a Powershell script to drive the process. I tried to write the script modular so that the individual modules can be excluded, enhanced, or added as needed.

Prerequisites:

In the example I used, IAM permissions were needed for the following AWS Services:

- S3

- Secrets Manager (Holds SES uid/pwd)

- SES (Simple Email Service)

The script is below. As always comments and suggestions for improvement are encouraged.

Hope this helps… Cloud On!

“The cloud is an architect’s dream. Prior to the cloud if I screwed something up there was tangible evidence that had to be destroyed. Now it’s just a blip in the bill.” – Mike Spence

<# SQL-2-S3 - Powershell Script to Backup SQL database to S3

Author: Michael Spence (mike@spikebiz.com)

Date: 8-20-2020

#>

# Global Variable Declarations

$DaysOnDisk = "-7" <# Number of Days to keep backup file on Disk #>

$BackupPath = "E:\Backups\" <# Path to store the backups #>

$BackupDate = (Get-Date -f yyyy-MM-dd_HH-mm)

$S3Bucket = "S3BucketName" <# Name of your S3 Bucket #>

$LogFile = $BackupPath + $BackupDate + ".log"

$DBName = "SQLDatabaseName" <# Name of the SQL Database #>

Add-Content -Path $LogFile -Value "Backup Started On: $BackupDate"

#

# Function Definitions

#

# Backup the SQL database(s)

Function Backup {

param (

[Parameter (Mandatory=$true)][String]$Database

)

$BackupFile = $BackupPath + $Database + "-" + $BackupDate + ".bak"

Add-Content -Path $LogFile -Value "Backing up Database: $Database"

Backup-SqlDatabase -ServerInstance "." -Database $Database -BackupFile $BackupFile

S3Copy $BackupFile $BackupDate $S3Bucket "SQLBackup"

}

# Copy file to S3

Function S3Copy {

param (

[Parameter (Mandatory=$true)][String]$FileToCopy,

[Parameter (Mandatory=$true)][String]$S3KeyDate,

[Parameter (Mandatory=$true)][String]$S3BucketName,

[Parameter (Mandatory=$true)][String]$S3Tag

)

# Copy file to S3

$S3Key = "/" + $S3KeyDate + "/" + [System.IO.Path]::GetFileName($FileToCopy)

Write-S3Object -BucketName $S3BucketName -File $FileToCopy -Key $S3Key -TagSet @{Key="FileType";Value=$S3Tag}

Add-Content -Path $LogFile -Value "Copied $FileToCopy to $S3BucketName as $S3Key"

}

# Cleanup Files in the Backup Directory

Function Cleanup {

param (

[Parameter (Mandatory=$true)][String]$Daysback

)

$DaysToDelete = (Get-Date).AddDays($Daysback)

Add-Content -Path $LogFile -Value "Deleting Backups Older Than: $DaysToDelete"

# Cleanup old files on disk

$Files = Get-Childitem $BackupPath -Recurse | Where {$_.LastWriteTime -le "$DaysToDelete"}

foreach ($File in $Files)

{

if ($File -ne $NULL)

{

Add-Content -Path $LogFile -Value "Deleting the file: $File"

Remove-Item $File.FullName

}

else {

Add-Content -Path $LogFile -Value "No files to cleanup"

}

}

}

# Send the Log File out AWS SES

Function SendLog {

param (

[Parameter (Mandatory=$true)][String]$LogToSend

)

#Obtain Amazon SES Credentials from Amazon Secrets Manager

$Region = "us-west-2" <# Your AWS region #>

$SMTPServer = "email-smtp.us-west-2.amazonaws.com" <# Your SES SMTP Server #>

$Secret = (Get-SECSecretValue -SecretId <Secret Name> -Region $Region).SecretString | ConvertFrom-JSON

$Username = ($Secret | Get-Member | Where Membertype -eq "NoteProperty").Name

$Password = ConvertTo-SecureString $Secret.$Username -AsPlainText -Force

$Credential = New-Object System.Management.Automation.PsCredential($Username,$Password)

$from = "email-address" <# SES Verified Email Address #>

$to = "email-address" <# Email to receive Log File #>

$subject = "Backup Log from: " + $LogToSend

$emailbody = "

<h3>Backup LogFile: $LogToSend </h3>

"

$contents = Get-Content -Path $LogToSend

ForEach ($line in $contents) {

$emailbody = $emailbody + "<p> $line </p>"

}

Write-Host "Sending Email via AmazonSES"

Send-MailMessage -from $from -to $to -subject $subject -body $emailbody -bodyasHTML -smtpServer $SMTPServer -credential $Credential -UseSsl -Port 587

Write-Host "Sent"

}

Backup $DBName <# Backup the SQL Databaes #>

Cleanup $DaysOnDisk <# Cleanup the Backup Directory #>

SendLog $LogFile <# Send out the Log File with AWS SES #>

S3Copy $LogFile $BackupDate $S3Bucket "LogFile" <# Add the log file to Bucket #>